Overview

Test suites are hard to build, but your business needs one.

They are hard because modern applications are complex.

Technology can help you. There are tools that can simplify the task of creating a thorough test suite.

Does your main business have a regression test suite?

The likely answers to the above question are:

- "Yes." Good chap. Keep up the good work. You may stop reading, but, just out of curiosity, read on.

- "No. I don't need one." You may stop reading. Most likely your business is already doomed. :)

- "No. I don't have time (or don't know how) to make one." Keep reading. There is something for you.

What's a regression test?

It is a useful watchdog that tells you whether your software is working the way it was intended to.

If you don't have one, you have no quick and reliable way of telling if your application is safe.

If you think that you don't need a regression test suite, either you have found the formula for making perfect software, or your optimism is way off the mark.

Tests are hard

If you have a test suite, you know that it requires hard work, dedication, cunning ways of thinking, and technical abilities.

If your business includes a MySQL database, you may consider using MySQL's own test framework, which introduces yet another level of difficulty.

The MySQL test framework is designed to test any database setup (standalone, replicated, or clustered) with just one box, at the price of an additional language that you need to learn.

Writing your own regression test that covers your application means reproducing the data structure and replaying the queries, to make sure that, if you alter the working environment, your application keeps working as required.

The most likely scenario occurs when you need to upgrade. Your current server has a bug that was just fixed in a more recent version. You would like to upgrade, but before doing that you would like to be sure that the new environment is capable of running your queries without side effects.

A regression test would solve the problem. You set up a temporary server, install the upgraded software and run the test suite. If you don't get errors, you may confidently upgrade. It depends on how thorough your test is, of course. The more you cover, the more you can trust it.

If you don't have a regression test and you need to upgrade, you are in trouble. If there are inconsistencies between your application and the new version, you will find out when a user complains about a failure, which is not the most desirable course of events.

Understanding the problem

Why is making a test suite for an application so hard? Mostly because you don't usually have a list of all the queries running against your server. Even if you planned a modular application and all your database calls are grouped in one easily accessible part of your code, that won't solve your problem. Modern applications don't use static queries. Most queries are created on the fly by your application server, twisting a template and adding parameters from user input.

And even if your templates are really well planned, so that they leave nothing to the imagination, you will have trouble figuring out what happens when many requests access your database concurrently.

Smarter applications can log all database requests and leave a trail that you can use to create your test. But more complex systems include more than one application, and keeping track of all their queries is a real headache.

Making tests easier

Fortunately, there is a central place where all queries go. Your server.

Unfortunately, the server does not create a test case for you. It just records all calls in the general log, and that log is not suitable for further consumption.

Here comes the technology I mentioned earlier. You can make a test script out of your general log.

- Activate the general log;

- Download the test maker;

- Inspect the general log and identify the likely period when the most important queries occur;

- Tell the test maker to read the above traffic (which you can identify by line number or by timestamp);

- Edit the resulting test and see if it covers your needs.
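The reading step can be sketched in Python. This is a minimal sketch, not the code of test_maker.pl (which is a Perl script), and it assumes a simplified view of the MySQL 5.0 general log file: each record starts with a `yymmdd hh:mm:ss` timestamp and a tab (or with two tabs when the timestamp has not changed), followed by the connection ID, the command name and its argument. The `COMMANDS` tuple is a hypothetical subset of the command names the server can write, and `LogRecord` and `parse_general_log` are illustrative names.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Hypothetical subset of the command names the server writes to the log.
COMMANDS = ("Connect", "Init DB", "Query", "Quit", "Field List")

# Assumed record layout: "yymmdd hh:mm:ss<TAB>" (or "<TAB><TAB>" when the
# timestamp is unchanged), then connection ID, command name and argument.
RECORD_RE = re.compile(
    r"^(?:(?P<ts>\d{6}\s+\d{1,2}:\d{2}:\d{2})\t|\t\t)"
    r"\s*(?P<conn>\d+)\s+(?P<cmd>" + "|".join(COMMANDS) + r")\b\s*(?P<arg>.*)$"
)


@dataclass
class LogRecord:
    line_no: int               # 1-based log line where the record starts
    timestamp: Optional[str]   # last timestamp seen, carried forward
    conn_id: int               # connection (thread) ID
    command: str               # e.g. "Connect", "Query"
    argument: str              # query text, "user@host on db", ...


def parse_general_log(lines: Iterable[str]) -> List[LogRecord]:
    """Split general log lines into records, joining multi-line queries."""
    records: List[LogRecord] = []
    last_ts: Optional[str] = None
    for line_no, raw in enumerate(lines, start=1):
        line = raw.rstrip("\n")
        match = RECORD_RE.match(line)
        if match:
            if match.group("ts"):
                # Collapse the padding of the hour field: "070610  9:05:02".
                last_ts = " ".join(match.group("ts").split())
            records.append(LogRecord(line_no, last_ts,
                                     int(match.group("conn")),
                                     match.group("cmd"),
                                     match.group("arg").strip()))
        elif records and records[-1].command == "Query":
            # A line that does not start a record continues a multi-line query.
            records[-1].argument += "\n" + line
        # Anything else (the header printed at server start) is ignored.
    return records
```

Each record keeps its line number and the last timestamp seen, which is the information a line-based or timestamp-based filter needs.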

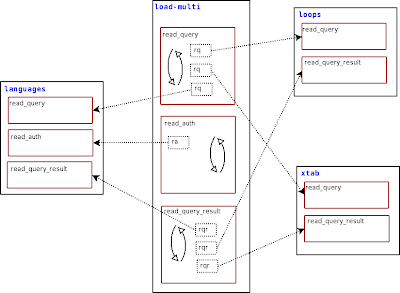

The test maker is a collection of two tools: one that takes a snapshot of your data (like mysqldump, but with a LIMIT clause, so that you can take only a sample of your data), and the test maker itself, which reads the general log and creates a script. You can tune this tool with several options.
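The snapshot idea, a dump limited to a sample of rows, can be illustrated with a small Python sketch. It uses the standard-library `sqlite3` module as a stand-in for a MySQL server, so that the example runs without a database installation; `sql_literal` and `sample_dump` are hypothetical names and not part of the test maker. String values are escaped MySQL-style, with backslashes.

```python
import sqlite3
from typing import List


def sql_literal(value) -> str:
    """Render a Python value as a MySQL-style SQL literal."""
    if value is None:
        return "NULL"
    if isinstance(value, (int, float)):
        return repr(value)
    # Escape backslashes first, then single quotes.
    text = str(value).replace("\\", "\\\\").replace("'", "\\'")
    return f"'{text}'"


def sample_dump(conn: sqlite3.Connection, table: str, limit: int) -> List[str]:
    """Return INSERT statements for at most `limit` rows of `table`.

    `table` is interpolated directly into the query, so it must come from
    a trusted list of table names, never from user input.
    """
    cursor = conn.execute(f"SELECT * FROM {table} LIMIT ?", (limit,))
    return [
        f"INSERT INTO {table} VALUES ({', '.join(sql_literal(v) for v in row)});"
        for row in cursor
    ]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)",
                     [(1, "Alice"), (2, "O'Brien"), (3, "Carol")])
    for statement in sample_dump(conn, "customers", 2):
        print(statement)
```

Without an ORDER BY, which rows end up in the sample is up to the server; for a repeatable snapshot you would sort on a key column.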

```
Log Analyzer and test maker, version 1.0.2 [2007-06-10]
(C) 2007 Giuseppe Maxia, MySQL AB

Syntax: ./testing_tools/test_maker.pl [options] log_file test_file

--add_disable_warnings|w Add disable warnings before DROP commands. (default: 0))
--from_timestamp|t = name Starts analyzing from given timestamp. (default: undefined))
--to_timestamp|T = name Stops analyzing at given timestamp. (default: undefined))
--from_line|l = number Starts analyzing at given line. (default: first))
--to_line|L = number Stops analyzing at given line. (default: last))
--from_statement|s = name Starts analyzing at given statement. (default: first))
--to_statement|S = name Stops analyzing at given statement. (default: last))
--dbs = name Comma separate list of databases to parse. (default: all))
--users = name Comma separate list of users to parse. (default: all))
--connections = name Comma separate list of connection IDs to parse. (default: all))
--verbose Adds more info to the test . (default: 0 ))
--help Display this help . (default: 0 ))
```

As you can see from the options, you can filter the log by several combinations of connection IDs, users, databases, statements, timestamps, and line numbers.
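A minimal Python sketch of how such filters can be combined, assuming each parsed log record carries its line number, connection ID, command and argument. The parameter names echo `--from_line`, `--to_line`, `--users` and `--connections`, but this is an illustration, not the logic of test_maker.pl. One detail is worth noting: the general log names the user only in the `Connect` record, so a user filter has to remember which user opened each connection, even when that `Connect` falls outside the selected line range.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Set


@dataclass
class LogRecord:
    line_no: int     # line in the general log where the record starts
    conn_id: int     # connection (thread) ID written by the server
    command: str     # e.g. "Connect", "Query", "Quit"
    argument: str    # query text, or "user@host on db" for Connect


def filter_records(
    records: Iterable[LogRecord],
    from_line: Optional[int] = None,
    to_line: Optional[int] = None,
    users: Optional[Set[str]] = None,
    connections: Optional[Set[int]] = None,
) -> List[LogRecord]:
    """Keep the records that satisfy every filter that was given."""
    conn_user: Dict[int, str] = {}
    selected: List[LogRecord] = []
    for rec in records:
        if rec.command == "Connect":
            # Assumed layout "user@host on db": remember the user before any
            # range check, so later queries on this connection can be matched.
            conn_user[rec.conn_id] = rec.argument.split("@", 1)[0]
        if from_line is not None and rec.line_no < from_line:
            continue
        if to_line is not None and rec.line_no > to_line:
            continue
        if connections is not None and rec.conn_id not in connections:
            continue
        if users is not None and conn_user.get(rec.conn_id) not in users:
            continue
        selected.append(rec)
    return selected
```

Filters left as `None` do not restrict anything, which mirrors the "(default: all)" behaviour listed in the help text.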

The resulting script is suitable for the MySQL test framework.
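To give an idea of what such a script looks like, here is a hypothetical Python sketch that turns (command, argument) pairs from the log into mysqltest text: each query becomes a statement terminated by a semicolon, `Init DB` becomes a `USE` statement, and, when requested, `DROP` statements are wrapped in `--disable_warnings` / `--enable_warnings`, which is one plausible reading of the `--add_disable_warnings` option. It is a sketch under these assumptions, not the output format of test_maker.pl.

```python
from typing import Iterable, List, Tuple


def to_mysqltest(records: Iterable[Tuple[str, str]],
                 add_disable_warnings: bool = False) -> str:
    """Turn (command, argument) pairs from the general log into mysqltest text."""
    out: List[str] = []
    for command, argument in records:
        if command == "Init DB":
            # The client changed its default database.
            out.append(f"USE {argument};")
        elif command == "Query":
            stmt = argument.strip().rstrip(";")
            is_drop = stmt.upper().startswith("DROP")
            if add_disable_warnings and is_drop:
                # DROP ... IF EXISTS can raise a warning when the object is
                # missing; silence it so the recorded result stays stable.
                out.append("--disable_warnings")
            # Statements that contain ";" themselves (stored routine bodies)
            # would need a delimiter change, which this sketch does not handle.
            out.append(stmt + ";")
            if add_disable_warnings and is_drop:
                out.append("--enable_warnings")
        # Connect, Quit and other commands are skipped here; replaying several
        # concurrent connections would also need mysqltest connection handling.
    return "\n".join(out) + "\n"
```

For example, `to_mysqltest([("Query", "DROP TABLE IF EXISTS t1")], add_disable_warnings=True)` yields the DROP statement between a `--disable_warnings` line and an `--enable_warnings` line.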

Advantages

The test maker is not a panacea, but it helps reduce your test suite development time. Running this tool, you get a passable starting test, which you can then refine to bring it closer to your needs.

The main advantage is that you get a set of instructions that were actually run against your production server, and you can replay them against a test server when you need to upgrade.

If some features of MySQL 5.1 look appealing and you plan to upgrade, then a test suite is the best way of making sure that the switch will be painless.

More than that, once you have a test suite, consider joining the Community Testing and Benchmarking Program, a project to share test suites from the Community, so that your test case becomes part of the MySQL AB test suite. Thus, you won't be alone with your upgrade problems. If there are any regression failures, they will be addressed before the next version is released, for the mutual benefit of company and users.

Known issues

There are problems, of course.

The general log is not turned on by default. To switch it on, you need to restart the server, and to switch it off you need to restart it again (until you upgrade to MySQL 5.1, which has logs on demand).

Moreover, the general log can become huge, so it needs additional maintenance to avoid filling the whole disk with logs.

Furthermore, the general log records everything sent to the server, even wrong queries. While this is desirable for a test case, because testing failures is as important as testing compliance, you will need to adjust the test script manually, adding "--error" directives.
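Adding those directives can be partly scripted. The following Python sketch inserts an `--error` line before each statement listed in a hypothetical `expected_errors` mapping; in a mysqltest script, `--error` followed by an error number tells the framework that the next statement is expected to fail with that error. How you collect the mapping (for example, by running the raw script once and noting which statements fail) is an assumption left to you.

```python
from typing import Dict, List


def add_error_directives(script: str, expected_errors: Dict[str, int]) -> str:
    """Insert "--error N" before every statement listed in expected_errors.

    expected_errors maps the exact statement line, as written in the script,
    to the MySQL error number that statement is expected to raise.
    """
    out: List[str] = []
    for line in script.splitlines():
        code = expected_errors.get(line.strip())
        if code is not None:
            out.append(f"--error {code}")
        out.append(line)
    return "\n".join(out) + "\n"
```

For instance, `add_error_directives(script, {"SELECT * FROM missing;": 1146})` puts `--error 1146` (MySQL's "table doesn't exist" error) on the line right before that query. Matching on exact text is deliberately simple; a statement that appears several times gets the directive every time.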

Also, the test maker produces only the test script, not the result file, which you will need to create using the test framework.

All in all, having a tool write a big chunk of the test script is much better than doing it all manually. So even if this is not a complete solution, it's a hell of a good start.

Comments welcome.