Previous episodes:

In the previous article, we saw the basics of establishing replication from multiple origins to the same destination. By extending that concept, we can deploy more complex topologies, such as the point-to-point (P2P) all-masters topology, a robust and fast way of moving data.

Figure 1 - Hierarchical replication depth of processing

Figure 1 - Hierarchical replication depth of processing

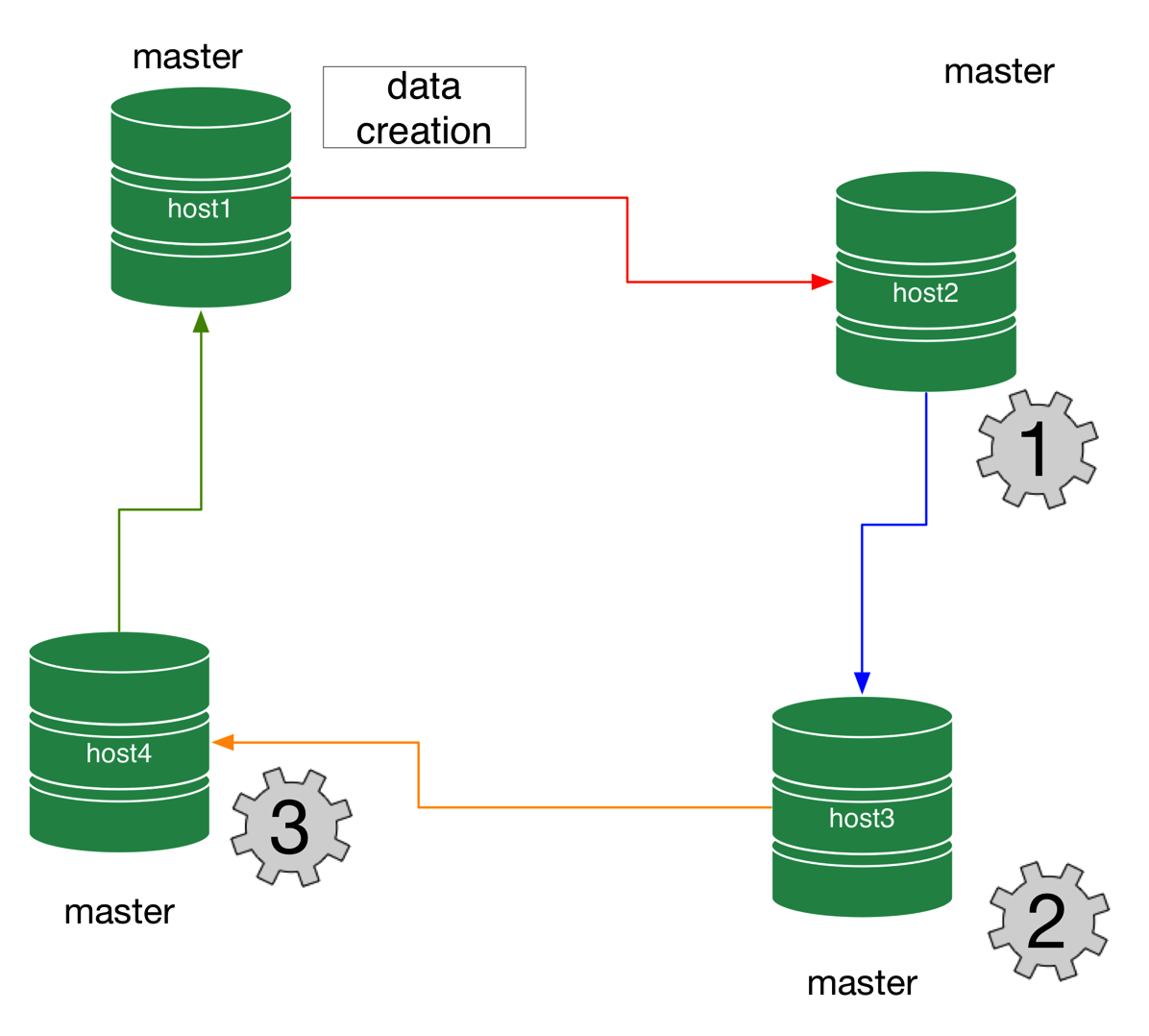

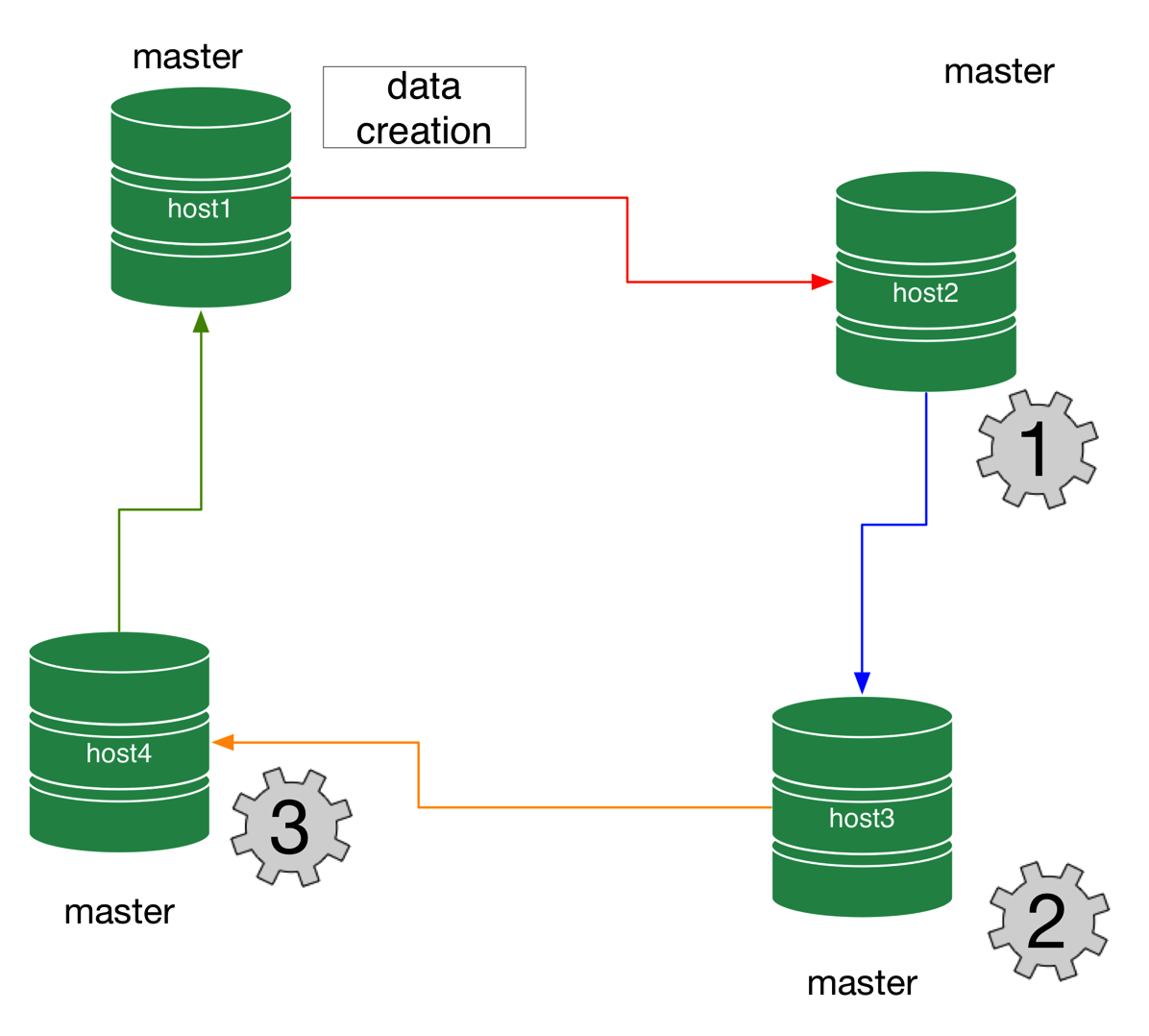

Figure 2 - Circular replication depth of processing

Figure 2 - Circular replication depth of processing

Why is this important? The number of steps affects performance, resilience, and, potentially, accuracy.

Figure 3 - All-masters P2P replication

Figure 3 - All-masters P2P replication

An all-masters P2P topology is a lot like fan-in topology, but with the number of nodes, masters, and slaves being the same. If all the nodes are fan-in slaves, and are also masters at the same time, every node can get data from the others and can send data at the same time.

Figure 4 - All-masters P2P replication depth of processing

Figure 4 - All-masters P2P replication depth of processing

In an all-masters P2P topology, each node replicates to every other node. Compared to circular replication, this deployment requires more connections per node (it's a small price to pay) but the data flows faster and more cleanly, as the origin of each transaction is easier to track.

As we did for fan-in topologies, we load the Sakila database in one of the nodes, to get some differences, and then look at the GTID situation:

Let's have a look inside:

And here we can see once more why it was a bad decision not to have a table for master status:

Monitoring this topology presents the same hurdles already seen for fan-in, increased by the number of connections. For a N-node deployment, we will need to monitor N*(N-1) channels.

The lack of a table for master status is felt more acutely in this topology, as the current data is more difficult to parse.

We can, however, compromise between the need of having many masters and the complexity of the deployment. We will see the star topology, where, by introducing a SPOF in the system we can deploy a more agile all-masters topology. And we will also see some hybrid deployments, all made possible by the multi-source enhancements in MySQL 5.7 and MariaDB 10.

- MySQL replication monitoring 101

- MySQL replication in action - Part 1: GTID & Co

- MySQL replication in action - Part 2 - Fan-in topology

In the previous article, we saw the basics of establishing replication from multiple origins to the same destination. By extending that concept, we can deploy more complex topologies, such as the point-to-point (P2P) all-masters topology, a robust and fast way of moving data.

Introduction to P2P all-masters topology

A P2P (Point-to-point) topology is a kind of deployment where replication happens in a single step from the producer to the consumers. For example, in a master/slave topology, replication from the master (producer) reaches every slave (consumer) in one step. This is simple P2P replication. If we use a hierarchical deployment, where every slave that is connected to the master is also replicating to one or more slaves, we will have a 2-step replication (Figure 1). Similarly, in circular replication, we have as many steps as the number of nodes minus one (Figure 2.) Figure 1 - Hierarchical replication depth of processing

Figure 1 - Hierarchical replication depth of processing Figure 2 - Circular replication depth of processing

Figure 2 - Circular replication depth of processingWhy is this important? The number of steps affects performance, resilience, and, potentially, accuracy.

- Performance depends on the number of steps. Before the final leaf of the topology graph gets the data, it will replicate N times, one for each step. In figure 1, host4 will be updated twice as slower as host2. In Figure 2, host4 will be three times slower than host2, as it has to wait for two steps before data reaches its tables.

- Resilience, or the capacity to withstand failures, also depends on the number of intermediate steps. Intermediate masters are single points of failure (SPOF) that can break a branch of the topology graph, or the whole deployment. In this context, a master/slave deployment has one SPOF; the topology in figure 1 has 2, and the circular replication has 4 of them.

- Accuracy can be different if the data goes from master to slave directly, compared to the data going through one or more intermediaries. If data is applied and then extracted again, its chances of reaching the final destination unchanged depend on the intermediate masters to have exactly the same configuration as its predecessors in the chain.

Figure 3 - All-masters P2P replication

Figure 3 - All-masters P2P replication An all-masters P2P topology is a lot like fan-in topology, but with the number of nodes, masters, and slaves being the same. If all the nodes are fan-in slaves, and are also masters at the same time, every node can get data from the others and can send data at the same time.

Figure 4 - All-masters P2P replication depth of processing

Figure 4 - All-masters P2P replication depth of processingIn an all-masters P2P topology, each node replicates to every other node. Compared to circular replication, this deployment requires more connections per node (it's a small price to pay) but the data flows faster and more cleanly, as the origin of each transaction is easier to track.

Deploying a P2P all-masters topology in MySQL 5.7

The procedure is the same that we have seen for fan-in replication, but with a few differences:- Every node needs to be a master, and therefore it must have binary logs configured;

- The procedure for connecting to the other nodes needs to be repeated for each node. In a N-node deployment, you will end up having, for each node, N-1 slave channels.

$ ./multi_source.sh 5.7.8 mysql ALL-MASTERS

installing node 1

installing node 2

installing node 3

installing node 4

group directory installed in $HOME/sandboxes/multi_msb_5_7_8

# server: 1:

# server: 2:

# server: 3:

# server: 4:

# option 'master-info-repository=table' added to node1 configuration file

# option 'relay-log-info-repository=table' added to node1 configuration file

# option 'gtid_mode=ON' added to node1 configuration file

# option 'enforce-gtid-consistency' added to node1 configuration file

# option 'master-info-repository=table' added to node2 configuration file

# option 'relay-log-info-repository=table' added to node2 configuration file

# option 'gtid_mode=ON' added to node2 configuration file

# option 'enforce-gtid-consistency' added to node2 configuration file

# option 'master-info-repository=table' added to node3 configuration file

# option 'relay-log-info-repository=table' added to node3 configuration file

# option 'gtid_mode=ON' added to node3 configuration file

# option 'enforce-gtid-consistency' added to node3 configuration file

# option 'master-info-repository=table' added to node4 configuration file

# option 'relay-log-info-repository=table' added to node4 configuration file

# option 'gtid_mode=ON' added to node4 configuration file

# option 'enforce-gtid-consistency' added to node4 configuration file

# executing "stop" on $HOME/sandboxes/multi_msb_5_7_8

executing "stop" on node 1

executing "stop" on node 2

executing "stop" on node 3

executing "stop" on node 4

# executing "start" on $HOME/sandboxes/multi_msb_5_7_8

executing "start" on node 1

. sandbox server started

executing "start" on node 2

. sandbox server started

executing "start" on node 3

. sandbox server started

executing "start" on node 4

. sandbox server started

# Setting topology ALL-MASTERS

# node node1

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8380, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node2'

START SLAVE for channel 'node2'

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8381, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node3'

START SLAVE for channel 'node3'

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8382, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node4'

START SLAVE for channel 'node4'

--------------

# node node2

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8379, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node1'

START SLAVE for channel 'node1'

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8381, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node3'

START SLAVE for channel 'node3'

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8382, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node4'

START SLAVE for channel 'node4'

--------------

# node node3

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8379, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node1'

START SLAVE for channel 'node1'

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8380, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node2'

START SLAVE for channel 'node2'

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8382, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node4'

START SLAVE for channel 'node4'

--------------

# node node4

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8379, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node1'

START SLAVE for channel 'node1'

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8380, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node2'

START SLAVE for channel 'node2'

--------------

CHANGE MASTER TO MASTER_HOST='127.0.0.1', MASTER_PORT=8381, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_AUTO_POSITION=1 for channel 'node3'

START SLAVE for channel 'node3'

--------------

$HOME/git/mysql-replication-samples/test_all_masters_replication.sh -> $HOME/sandboxes/multi_msb_5_7_8/test_all_masters_replication.sh

$ ./test_all_masters_replication.sh

# NODE node1 created table test_node1

# NODE node2 created table test_node2

# NODE node3 created table test_node3

# NODE node4 created table test_node4

# Data in all nodes

101

1 101 8379 node1 2015-08-12 19:40:35

1 102 8380 node2 2015-08-12 19:40:35

1 103 8381 node3 2015-08-12 19:40:35

1 104 8382 node4 2015-08-12 19:40:35

102

1 101 8379 node1 2015-08-12 19:40:35

1 102 8380 node2 2015-08-12 19:40:35

1 103 8381 node3 2015-08-12 19:40:35

1 104 8382 node4 2015-08-12 19:40:35

103

1 101 8379 node1 2015-08-12 19:40:35

1 102 8380 node2 2015-08-12 19:40:35

1 103 8381 node3 2015-08-12 19:40:35

1 104 8382 node4 2015-08-12 19:40:35

104

1 101 8379 node1 2015-08-12 19:40:35

1 102 8380 node2 2015-08-12 19:40:35

1 103 8381 node3 2015-08-12 19:40:35

1 104 8382 node4 2015-08-12 19:40:35

As we did for fan-in topologies, we load the Sakila database in one of the nodes, to get some differences, and then look at the GTID situation:

$ for N in 1 2 3 4 ; do ./n$N -e 'select @@server_id, @@server_uuid; select @@global.gtid_executed\G'; done

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 101 | 18fd3be0-4119-11e5-97cd-24acf2bbd1e4 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3,

1e629814-4119-11e5-85cf-aac6e218d3d8:1-119,

226e3350-4119-11e5-8242-de985f123dfc:1-3,

270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 102 | 1e629814-4119-11e5-85cf-aac6e218d3d8 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3,

1e629814-4119-11e5-85cf-aac6e218d3d8:1-119,

226e3350-4119-11e5-8242-de985f123dfc:1-3,

270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 103 | 226e3350-4119-11e5-8242-de985f123dfc |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3,

1e629814-4119-11e5-85cf-aac6e218d3d8:1-119,

226e3350-4119-11e5-8242-de985f123dfc:1-3,

270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 104 | 270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3,

1e629814-4119-11e5-85cf-aac6e218d3d8:1-119,

226e3350-4119-11e5-8242-de985f123dfc:1-3,

270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3

Let's have a look inside:

node1 [localhost] {msandbox} ((none)) > SHOW SLAVE STATUS\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 127.0.0.1

Master_User: rsandbox

Master_Port: 8380

Connect_Retry: 60

Master_Log_File: mysql-bin.000002

Read_Master_Log_Pos: 1164948

Relay_Log_File: mysql-relay-node2.000002

Relay_Log_Pos: 1165161

Relay_Master_Log_File: mysql-bin.000002

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 1164948

Relay_Log_Space: 1165370

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 102

Master_UUID: 1e629814-4119-11e5-85cf-aac6e218d3d8

Master_Info_File: mysql.slave_master_info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set: 1e629814-4119-11e5-85cf-aac6e218d3d8:1-119

Executed_Gtid_Set: 18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3,

1e629814-4119-11e5-85cf-aac6e218d3d8:1-119,

226e3350-4119-11e5-8242-de985f123dfc:1-3,

270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3

Auto_Position: 1

Replicate_Rewrite_DB:

Channel_Name: node2

*************************** 2. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 127.0.0.1

Master_User: rsandbox

Master_Port: 8381

Connect_Retry: 60

Master_Log_File: mysql-bin.000002

Read_Master_Log_Pos: 891

Relay_Log_File: mysql-relay-node3.000002

Relay_Log_Pos: 1104

Relay_Master_Log_File: mysql-bin.000002

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 891

Relay_Log_Space: 1313

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 103

Master_UUID: 226e3350-4119-11e5-8242-de985f123dfc

Master_Info_File: mysql.slave_master_info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set: 226e3350-4119-11e5-8242-de985f123dfc:1-3

Executed_Gtid_Set: 18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3,

1e629814-4119-11e5-85cf-aac6e218d3d8:1-119,

226e3350-4119-11e5-8242-de985f123dfc:1-3,

270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3

Auto_Position: 1

Replicate_Rewrite_DB:

Channel_Name: node3

*************************** 3. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 127.0.0.1

Master_User: rsandbox

Master_Port: 8382

Connect_Retry: 60

Master_Log_File: mysql-bin.000002

Read_Master_Log_Pos: 891

Relay_Log_File: mysql-relay-node4.000002

Relay_Log_Pos: 1104

Relay_Master_Log_File: mysql-bin.000002

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 891

Relay_Log_Space: 1313

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 104

Master_UUID: 270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c

Master_Info_File: mysql.slave_master_info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set: 270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3

Executed_Gtid_Set: 18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3,

1e629814-4119-11e5-85cf-aac6e218d3d8:1-119,

226e3350-4119-11e5-8242-de985f123dfc:1-3,

270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3

Auto_Position: 1

Replicate_Rewrite_DB:

Channel_Name: node4

3 rows in set (0.00 sec)

And here we can see once more why it was a bad decision not to have a table for master status:

node1 [localhost] {msandbox} (mysql) > show master status\G

*************************** 1. row ***************************

File: mysql-bin.000002

Position: 891

Binlog_Do_DB:

Binlog_Ignore_DB:

Executed_Gtid_Set: 18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3,

1e629814-4119-11e5-85cf-aac6e218d3d8:1-119,

226e3350-4119-11e5-8242-de985f123dfc:1-3,

270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3

1 row in set (0.00 sec)

- Get the raw info: "18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3,1e629814-4119-11e5-85cf-aac6e218d3d8:1-119,226e3350-4119-11e5-8242-de985f123dfc:1-3,270c0ebe-4119-11e5-a1c9-b7fbc4e42c2c:1-3"

- Find the server UUID: "18fd3be0-4119-11e5-97cd-24acf2bbd1e4"

- Find the relevant GTID: "18fd3be0-4119-11e5-97cd-24acf2bbd1e4:1-3"

- Extract the GTID: "3"

Deploying a P2P all-masters topology in MariaDB 10

The installation is fairly similar to MySQL 5.7. We only see the same syntax differences already noted for fan-in topologies.$ ./multi_source.sh ma10.0.20 mariadb ALL-MASTERS

installing node 1

installing node 2

installing node 3

installing node 4

group directory installed in $HOME/sandboxes/multi_msb_ma10_0_20

# server: 1:

# server: 2:

# server: 3:

# server: 4:

# server: 1:

# server: 2:

# server: 3:

# server: 4:

# Setting topology ALL-MASTERS

# node node1

--------------

CHANGE MASTER 'node2' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19022, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node2'

--------------

CHANGE MASTER 'node3' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19023, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node3'

--------------

CHANGE MASTER 'node4' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19024, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node4'

--------------

# node node2

--------------

CHANGE MASTER 'node1' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19021, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node1'

--------------

CHANGE MASTER 'node3' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19023, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node3'

--------------

CHANGE MASTER 'node4' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19024, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node4'

--------------

# node node3

--------------

CHANGE MASTER 'node1' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19021, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node1'

--------------

CHANGE MASTER 'node2' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19022, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node2'

--------------

CHANGE MASTER 'node4' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19024, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node4'

--------------

# node node4

--------------

CHANGE MASTER 'node1' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19021, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node1'

--------------

CHANGE MASTER 'node2' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19022, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node2'

--------------

CHANGE MASTER 'node3' TO MASTER_HOST='127.0.0.1', MASTER_PORT=19023, MASTER_USER='rsandbox', MASTER_PASSWORD='rsandbox', MASTER_USE_GTID=current_pos

START SLAVE 'node3'

--------------

$ ./test_all_masters_replication.sh

# NODE node1 created table test_node1

# NODE node2 created table test_node2

# NODE node3 created table test_node3

# NODE node4 created table test_node4

# Data in all nodes

101

1 101 19021 node1 2015-08-12 20:20:46

1 102 19021 node2 2015-08-12 20:20:47

1 103 19021 node3 2015-08-12 20:20:47

1 104 19021 node4 2015-08-12 20:20:47

102

1 101 19022 node1 2015-08-12 20:20:46

1 102 19022 node2 2015-08-12 20:20:47

1 103 19022 node3 2015-08-12 20:20:47

1 104 19022 node4 2015-08-12 20:20:47

103

1 101 19023 node1 2015-08-12 20:20:46

1 102 19023 node2 2015-08-12 20:20:47

1 103 19023 node3 2015-08-12 20:20:47

1 104 19023 node4 2015-08-12 20:20:47

104

1 101 19024 node1 2015-08-12 20:20:46

1 102 19024 node2 2015-08-12 20:20:47

1 103 19024 node3 2015-08-12 20:20:47

1 104 19024 node4 2015-08-12 20:20:47

$ for N in 1 2 3 4; do ./n$N -e 'select @@server_id; select @@global.gtid_current_pos\G' ; done

+-------------+

| @@server_id |

+-------------+

| 101 |

+-------------+

*************************** 1. row ***************************

@@global.gtid_current_pos: 1020-102-119,1040-104-3,1030-103-3,1010-101-3

+-------------+

| @@server_id |

+-------------+

| 102 |

+-------------+

*************************** 1. row ***************************

@@global.gtid_current_pos: 1010-101-3,1040-104-3,1030-103-3,1020-102-119

+-------------+

| @@server_id |

+-------------+

| 103 |

+-------------+

*************************** 1. row ***************************

@@global.gtid_current_pos: 1010-101-3,1040-104-3,1020-102-119,1030-103-3

+-------------+

| @@server_id |

+-------------+

| 104 |

+-------------+

*************************** 1. row ***************************

@@global.gtid_current_pos: 1010-101-3,1030-103-3,1020-102-119,1040-104-3

node1 [localhost] {msandbox} ((none)) > SHOW ALL SLAVES STATUS\G

*************************** 1. row ***************************

Connection_name: node2

Slave_SQL_State: Slave has read all relay log; waiting for the slave I/O thread to update it

Slave_IO_State: Waiting for master to send event

Master_Host: 127.0.0.1

Master_User: rsandbox

Master_Port: 19022

Connect_Retry: 60

Master_Log_File: mysql-bin.000001

Read_Master_Log_Pos: 3230973

Relay_Log_File: mysql-relay-node2.000002

Relay_Log_Pos: 3231260

Relay_Master_Log_File: mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 3230973

Relay_Log_Space: 3231559

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 102

Master_SSL_Crl:

Master_SSL_Crlpath:

Using_Gtid: Current_Pos

Gtid_IO_Pos: 1020-102-119

Retried_transactions: 0

Max_relay_log_size: 1073741824

Executed_log_entries: 263

Slave_received_heartbeats: 3

Slave_heartbeat_period: 1800.000

Gtid_Slave_Pos: 1020-102-119,1040-104-3,1030-103-3,1010-101-3

*************************** 2. row ***************************

Connection_name: node3

Slave_SQL_State: Slave has read all relay log; waiting for the slave I/O thread to update it

Slave_IO_State: Waiting for master to send event

Master_Host: 127.0.0.1

Master_User: rsandbox

Master_Port: 19023

Connect_Retry: 60

Master_Log_File: mysql-bin.000001

Read_Master_Log_Pos: 882

Relay_Log_File: mysql-relay-node3.000002

Relay_Log_Pos: 1169

Relay_Master_Log_File: mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 882

Relay_Log_Space: 1468

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 103

Master_SSL_Crl:

Master_SSL_Crlpath:

Using_Gtid: Current_Pos

Gtid_IO_Pos: 1030-103-3

Retried_transactions: 0

Max_relay_log_size: 1073741824

Executed_log_entries: 14

Slave_received_heartbeats: 3

Slave_heartbeat_period: 1800.000

Gtid_Slave_Pos: 1020-102-119,1040-104-3,1030-103-3,1010-101-3

*************************** 3. row ***************************

Connection_name: node4

Slave_SQL_State: Slave has read all relay log; waiting for the slave I/O thread to update it

Slave_IO_State: Waiting for master to send event

Master_Host: 127.0.0.1

Master_User: rsandbox

Master_Port: 19024

Connect_Retry: 60

Master_Log_File: mysql-bin.000001

Read_Master_Log_Pos: 882

Relay_Log_File: mysql-relay-node4.000002

Relay_Log_Pos: 1169

Relay_Master_Log_File: mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 882

Relay_Log_Space: 1468

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 104

Master_SSL_Crl:

Master_SSL_Crlpath:

Using_Gtid: Current_Pos

Gtid_IO_Pos: 1040-104-3

Retried_transactions: 0

Max_relay_log_size: 1073741824

Executed_log_entries: 14

Slave_received_heartbeats: 3

Slave_heartbeat_period: 1800.000

Gtid_Slave_Pos: 1020-102-119,1040-104-3,1030-103-3,1010-101-3

3 rows in set (0.00 sec)

$ for N in 1 2 3 4; do ./n$N -e 'select @@server_id, @@gtid_domain_id; select * from mysql.gtid_slave_pos' ; done

+-------------+------------------+

| @@server_id | @@gtid_domain_id |

+-------------+------------------+

| 101 | 1010 |

+-------------+------------------+

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 1020 | 124 | 102 | 118 |

| 1020 | 125 | 102 | 119 |

| 1030 | 5 | 103 | 2 |

| 1030 | 6 | 103 | 3 |

| 1040 | 8 | 104 | 2 |

| 1040 | 9 | 104 | 3 |

+-----------+--------+-----------+--------+

+-------------+------------------+

| @@server_id | @@gtid_domain_id |

+-------------+------------------+

| 102 | 1020 |

+-------------+------------------+

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 1010 | 2 | 101 | 2 |

| 1010 | 3 | 101 | 3 |

| 1030 | 5 | 103 | 2 |

| 1030 | 6 | 103 | 3 |

| 1040 | 8 | 104 | 2 |

| 1040 | 9 | 104 | 3 |

+-----------+--------+-----------+--------+

+-------------+------------------+

| @@server_id | @@gtid_domain_id |

+-------------+------------------+

| 103 | 1030 |

+-------------+------------------+

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 1010 | 2 | 101 | 2 |

| 1010 | 3 | 101 | 3 |

| 1020 | 124 | 102 | 118 |

| 1020 | 125 | 102 | 119 |

| 1040 | 8 | 104 | 2 |

| 1040 | 9 | 104 | 3 |

+-----------+--------+-----------+--------+

+-------------+------------------+

| @@server_id | @@gtid_domain_id |

+-------------+------------------+

| 104 | 1040 |

+-------------+------------------+

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 1010 | 2 | 101 | 2 |

| 1010 | 3 | 101 | 3 |

| 1020 | 124 | 102 | 118 |

| 1020 | 125 | 102 | 119 |

| 1030 | 8 | 103 | 2 |

| 1030 | 9 | 103 | 3 |

+-----------+--------+-----------+--------+

Summing up

Using the methods already learned for fan-in deployments, an all-masters P2P topology is easy to install, albeit longer and more complex.Monitoring this topology presents the same hurdles already seen for fan-in, increased by the number of connections. For a N-node deployment, we will need to monitor N*(N-1) channels.

The lack of a table for master status is felt more acutely in this topology, as the current data is more difficult to parse.

What's next

This topology shows that we can deploy a very efficient multi-source replication system, at the expense of having many connections and enduring more complex monitoring data.We can, however, compromise between the need of having many masters and the complexity of the deployment. We will see the star topology, where, by introducing a SPOF in the system we can deploy a more agile all-masters topology. And we will also see some hybrid deployments, all made possible by the multi-source enhancements in MySQL 5.7 and MariaDB 10.

No comments:

Post a Comment